Yesterday, I wrote about the Requirements 101 presentation I gave to my team about what I believe makes for good solution requirements.

(I was not able to limit myself to the 15 minutes I devoted to this as an agenda item because I like the sound of my own voice way too much, but that’s beside the point right now).

The important thing is that I generated some great discussion, which is exactly what I was hoping for. This was not intended to be a lecture, especially given that there are people in my group who are far better at this stuff than I am. The slide above prompted some great input.

I’d argued that the requirement “the monthly transaction report must be available on the next business day after the end of the calendar month” was a bad one, but I was intentionally tricking people. On the face of it there’s nothing wrong with this, and it was a trick because after soliciting feedback on the requirement and getting everybody’s input I only then let them know that the report in question takes 30 hours to generate and therefore, I argued, the requirement was not achievable. I said that issues could have been avoided by having the right people (probably technical SMEs of some description) at the table during the requirements phase of work.

Some people pushed back and said that if this really was a requirement of the hypothetical project to which it’s attached then work would simply have to be undertaken to reduce the time taken to generate the report. If, on the other hand, the project didn’t have the time and/or budget to support this work then that would be a separate issue to a certain extent, and there would be courses of action other than removing the requirement that could be pursued – but that this doesn’t make the requirement any less valid. People argued that it’s hard (if not impossible) to know that you need some additional technical input at this stage of the process without the benefit of hindsight. “You don’t know what you don’t know,” as one person succinctly summed up.

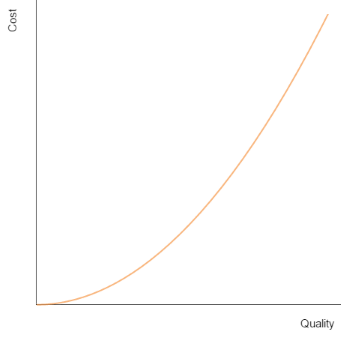

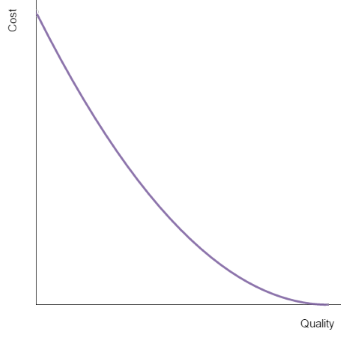

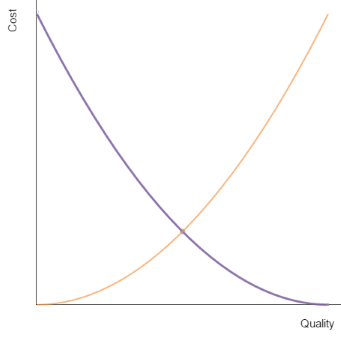

These are excellent points. In fact, often when I’m helping people document their initial requirements for a project I like to tell them (with my tongue firmly in my cheek) that anything is achievable, it will merely come down to how much time and money they have.

My point in including the example in my slide-deck is that I do believe there are opportunities to validate things like this before requirements are finalized and signed-off by stakeholders. If we are able to take advantage of these opportunities to move conversations like this one forwards in the project timeline then it will avoid back-and-forth between business and technical teams, avoid costly rework, and avoid nasty surprises further down the line.

I still feel that’s all true and that my point is a valid one, but of course let’s be realistic – how much effort do we really want to spend validating that each individual requirement is achievable considering every known and as-yet-unknown constraint (bearing in mind that we haven’t even moved in to the “execution” phase of work at this point in our story and the solution hasn’t been designed)? Should we really wait to secure the availability of a highly-sought technical resource to sit in meetings where they will only have minimal input to provide? Wouldn’t it be more efficient to get the stamp of approval in our requirements as-is and move forwards, allowing the solution architects to identify issues like this later (and suggest where compromises or alternative approaches may be necessary or beneficial)?

I suspect the answer – as is so often the case with the questions I pose on this blog – is all about finding an appropriate balance, but I don’t have any solid guidance here for you all.

What are your thoughts?