Ever since I bought my own home nearly a year ago, I’ve become increasingly interested in making it smart.

Right off the bat, I feel like I should clarify what that means to me. The ability to turn some lights on or off with an app is not smart, in my opinion – the smart way of controlling lights is by flicking a switch conveniently located in the room you wish to illuminate.

A smart home needs to be much more intelligent. It’s about automation. It’s about the home being able to notify me if something is happening that I need to know about. It’s about being able to accomplish things with minimal difficulty, not adding complexity and more steps.

That’s where off-the-shelf “smart home” solutions really started to fall down for me. I could spend hundreds or maybe even thousands of dollars, for what? The ability to turn on my living room lights while I’m still at the office? Why would I ever need to do that?

Nevertheless, the lack (in my opinion) of a pre-packaged, useful, holistic solution matching my vision of what a “smart home” should be didn’t deter me from tackling things bit by bit. It started with our burglar alarm. It’s connected to the internet, so it sends me alerts if something unexpected is happening and lets me arm or disarm the system from my phone – which I actually do find useful.

Next up was our thermostat. The one that was installed when we bought the house was an old-fashioned one with a simple mercury switch inside. You set the temperature, and that was it. We replaced that about a month ago with something programmable (it doesn’t need to be as warm in here at night as it does during the day; it doesn’t need to be as warm if nobody’s home), and I took the opportunity to get one with WiFi so I can set the temperature remotely. That’s not useful in and of itself, but if you take that functionality and look at it in the context of my wider vision, then the thermostat is certainly something I’d like to be able to programmatically control.

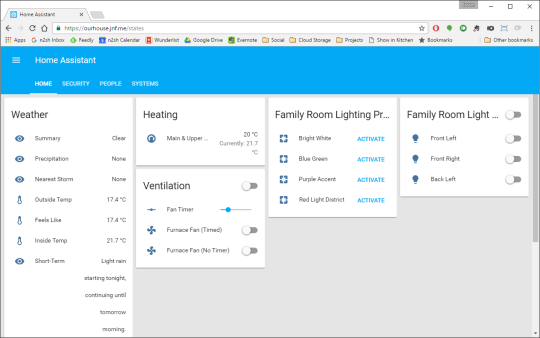

It was around this same time that I discovered Home Assistant, and now my dream is starting to come alive.

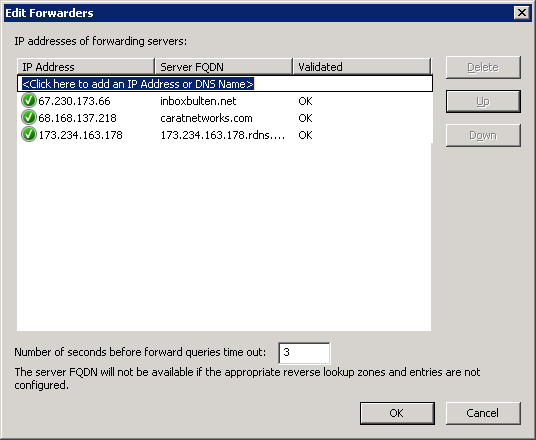

Home Assistant is an open-source project that runs on a variety of hardware (I was originally running it on a Raspberry Pi, and I’ve since switched to running it in a Docker container on our home server). It has a ton of plugins (“components”) that enable it to support a variety of products – including our existing alarm, thermostat, streaming media players, and others (including, somewhat ironically, the colour-changing lightbulbs we have in our family room). It can run scripts and automations, it uses our cellphones to know our locations, and it can send us push notifications.

My initial setup was all about notifications. If we both leave the house but the burglar alarm isn’t set, it tells us (and provides an easy way to fix the issue). If we leave one of the exterior doors open for more than five minutes, it notifies us (or just one of us, if the other is out). I also created a dashboard (which you may have seen in my last post) to display some of this information on a monitor in my office.
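To make those two notification rules concrete, here is a minimal sketch of the decision logic in plain Python. It is not Home Assistant’s API or my actual configuration: `Person`, `alarm_reminder_recipients`, and `open_door_recipients` are hypothetical names, and I’m assuming that “just one of us” means whoever is at home (with everyone notified if the house is empty).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# "More than five minutes" comes from the post; everything else is illustrative.
DOOR_OPEN_LIMIT = timedelta(minutes=5)


@dataclass
class Person:
    name: str
    is_home: bool  # in practice this would come from phone-based presence tracking


def alarm_reminder_recipients(people: list[Person], alarm_armed: bool) -> list[str]:
    """Warn everyone if the house is empty but the alarm has not been set."""
    if alarm_armed or any(p.is_home for p in people):
        return []
    return [p.name for p in people]


def open_door_recipients(
    people: list[Person], opened_at: datetime, now: datetime
) -> list[str]:
    """Warn about an exterior door left open for longer than the limit."""
    if now - opened_at <= DOOR_OPEN_LIMIT:
        return []
    at_home = [p.name for p in people if p.is_home]
    # Assumption: whoever is home gets the alert; if nobody is home, everyone does.
    return at_home or [p.name for p in people]
```

For example, with one person at home and a door open for six minutes, `open_door_recipients` returns only that person’s name.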

Since installing the thermostat I’ve added more automation. The time we go to bed isn’t always predictable, but when we do go to bed we set the alarm. So, if it’s after 7pm and the alarm goes from disarmed to armed, the thermostat gets put into night mode. If nobody is home, then the temperature gets gradually turned down based on how far away we are.
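The two thermostat rules boil down to a time-and-state check and a distance-to-setpoint mapping. The sketch below is one way to express them in plain Python, not how Home Assistant implements it: the alarm state strings, the 21 °C / 16 °C setpoints, the 20 km “full setback” distance, and the linear ramp are all hypothetical choices made for illustration.

```python
from datetime import datetime, time

NIGHT_MODE_AFTER = time(19, 0)  # "after 7pm" from the post

# Hypothetical numbers, not from the post: tune to taste.
COMFORT_C = 21.0        # setpoint when someone is home or nearby
MIN_AWAY_C = 16.0       # lowest setpoint when everyone is far away
FULL_SETBACK_KM = 20.0  # distance at which the setpoint bottoms out


def should_enter_night_mode(old_state: str, new_state: str, now: datetime) -> bool:
    """True when the alarm goes from disarmed to armed in the evening."""
    # Assumed state names: any state starting with "armed" counts as armed.
    return (
        old_state == "disarmed"
        and new_state.startswith("armed")
        and now.time() >= NIGHT_MODE_AFTER
    )


def away_setpoint(distances_km: list[float]) -> float:
    """Lower the setpoint linearly with the distance of whoever is closest to home."""
    closest = min(distances_km)
    fraction = min(closest / FULL_SETBACK_KM, 1.0)
    setpoint = COMFORT_C - fraction * (COMFORT_C - MIN_AWAY_C)
    # Round to the nearest half degree (an assumed thermostat resolution).
    return round(setpoint * 2) / 2
```

Basing the setback on the closest person means the house only cools down once all of us are far away. Note that the literal “after 7pm” check would miss a bedtime after midnight; a real rule might want a window that wraps past 00:00.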

If nobody is home at dusk, then it turns on some lights and streams talk radio through the family room speakers to give the impression that someone is.
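Home Assistant automations are built from a trigger, optional conditions, and actions, and the dusk rule fits that shape neatly. Here is a minimal plain-Python sketch of that pattern. `Rule`, `fire`, and the action strings are hypothetical stand-ins, not Home Assistant’s API, and the specific lights are assumptions, since the post only says “some lights”.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    trigger: str                       # event name that starts the rule
    condition: Callable[[dict], bool]  # checked against the current house state
    actions: list[str]                 # what to do if the condition passes


def fire(rules: list[Rule], event: str, state: dict) -> list[str]:
    """Return the actions of every rule whose trigger matches and whose condition holds."""
    done: list[str] = []
    for rule in rules:
        if rule.trigger == event and rule.condition(state):
            done.extend(rule.actions)
    return done


# The dusk rule from the post: only act when nobody is home.
dusk_rule = Rule(
    trigger="dusk",
    condition=lambda s: not any(s["people_home"].values()),
    actions=[
        "light.family_room:on",  # hypothetical light names
        "light.hallway:on",
        "media.family_room_speakers:play_talk_radio",
    ],
)
```

Keeping the trigger separate from the condition means the rule is evaluated only once, at dusk, rather than on every presence change.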

This stuff meets my definition of smart, and I’m barely scratching the surface. The open nature of the platform not only means that I’m not tied to a particular vendor or technology, but also means that I can add on to the system in a DIY way.

Which is exactly what I’m going to do. I’ve bought some NodeMCU microcontrollers, which are WiFi-enabled, Arduino IDE-compatible development boards designed to be the basis for DIY electronics projects.

Watch this space, because over the coming months I’ll be connecting our doorbell, garage door and laundry appliances to Home Assistant. I’ll be learning as I go, and I’ll share the hardware and software.